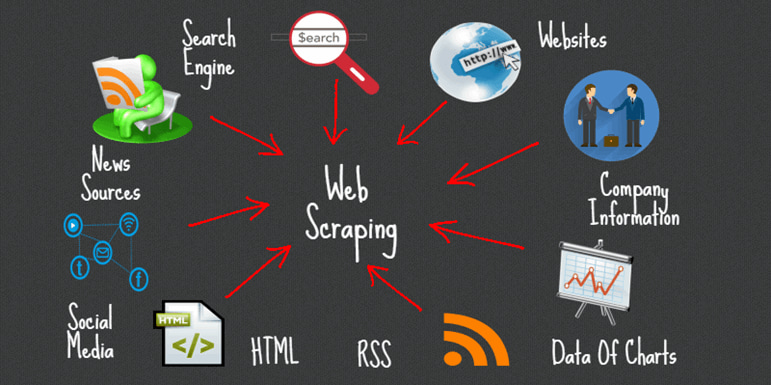

I'm trying to scrape paginated content with Beautiful Soup, so I used a webdriver to navigate to the other pages. However, I'm not sure how else to get content from a dynamic web page with a webdriver and feed it into my code. Below is the full code; I tried to implement the webdriver, but it is not working. Web scraping automates the collection of data, for example to build datasets for analysis and modelling.
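One common pattern, sketched here under assumptions: Selenium 4 drives the browser and clicks the "next" link, while Beautiful Soup parses `driver.page_source` (the HTML after JavaScript has run) on each page. The function name `scrape_all_pages` and the CSS selectors are hypothetical placeholders, and a real site usually needs an explicit wait (for example Selenium's `WebDriverWait`) after each click before the new content appears.

```python
from bs4 import BeautifulSoup


def scrape_all_pages(driver, item_selector, next_selector, max_pages=10):
    """Collect item text from every page of a paginated, JavaScript-rendered site.

    `driver` is assumed to be a Selenium 4 WebDriver already on the first page.
    `item_selector` and `next_selector` are hypothetical CSS selectors.
    """
    results = []
    for _ in range(max_pages):
        # Parse the HTML the browser has rendered, not the raw server response.
        soup = BeautifulSoup(driver.page_source, "html.parser")
        results.extend(el.get_text(strip=True) for el in soup.select(item_selector))

        # find_elements returns an empty list (no exception) when nothing matches;
        # "css selector" is the string value of selenium's By.CSS_SELECTOR.
        next_links = driver.find_elements("css selector", next_selector)
        if not next_links:
            break  # no "next" link: assume this was the last page
        next_links[0].click()
        # Assumption: on a real site, wait here for the next page to finish loading.
    return results
```

For example, with `driver = webdriver.Chrome()` already pointed at page 1 of a listing, `scrape_all_pages(driver, "div.item", "a.next")` would return the text of every matching item across all pages. Sites that disable the "next" link on the last page instead of removing it need a different stop condition.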

| Original author(s) | Leonard Richardson |
|---|---|
| Initial release | 2004 |
| Stable release | |
| Repository | |
| Written in | Python |
| Platform | Python |
| Type | HTML parser library, Web scraping |
| License | Python Software Foundation License (Beautiful Soup 3, an older version); MIT License (Beautiful Soup 4+)[1] |
| Website | www.crummy.com/software/BeautifulSoup/ |

Beautiful Soup is a Python package for parsing HTML and XML documents, including those with malformed markup such as non-closed tags (hence the name, after "tag soup"). It creates a parse tree for parsed pages that can be used to extract data from HTML,[2] which is useful for web scraping.[1]
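A minimal sketch of building and querying a parse tree from markup whose tags are never closed. The HTML snippet is made up for illustration, and it uses Python's built-in `html.parser`, so only `beautifulsoup4` needs to be installed.

```python
from bs4 import BeautifulSoup

# Made-up snippet: the <html>, <body>, <p> and <b> tags are never closed.
markup = "<html><body><p class='intro'>Hello, <b>soup"

soup = BeautifulSoup(markup, "html.parser")

paragraph = soup.find("p")       # first <p> tag in the parse tree
print(paragraph["class"])        # ['intro'] (class is multi-valued, so a list)
print(paragraph.b.get_text())    # soup (navigate to the nested <b> tag)
print(paragraph.get_text())      # Hello, soup (all text under <p>)
```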

Beautiful Soup was started by Leonard Richardson, who continues to contribute to the project,[3] and is additionally supported by Tidelift, a paid subscription service for open-source maintenance.[4]

It is available for Python 2.7 and Python 3.

Advantages and Disadvantages of Parsers

This table summarizes the advantages and disadvantages of each parser library:[1]

| Parser | Typical usage | Advantages | Disadvantages |
|---|---|---|---|
| Python’s html.parser | `BeautifulSoup(markup, 'html.parser')` | | |
| lxml’s HTML parser | `BeautifulSoup(markup, 'lxml')` | | |
| lxml’s XML parser | `BeautifulSoup(markup, 'lxml-xml')` | | |
| html5lib | `BeautifulSoup(markup, 'html5lib')` | | |
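A small sketch for comparing the parsers in the table on the same broken snippet. `html.parser` ships with Python, while lxml and html5lib are separate installs (`pip install lxml html5lib`); Beautiful Soup raises `FeatureNotFound` when a requested parser is missing, so the loop skips those. The snippet is made up, and the exact output depends on which parsers and versions are installed: some parsers add `<html>`/`<body>` wrappers or repair the markup differently.

```python
from bs4 import BeautifulSoup, FeatureNotFound

# Made-up malformed snippet: an unclosed <a> and a stray </p>.
markup = "<a></p>"

results = {}
for parser in ["html.parser", "lxml", "lxml-xml", "html5lib"]:
    try:
        results[parser] = str(BeautifulSoup(markup, parser))
    except FeatureNotFound:
        # lxml and html5lib are optional; record parsers that are not installed.
        results[parser] = None

for parser, tree in results.items():
    print(f"{parser:12} {tree if tree is not None else '(not installed)'}")
```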

Release

Beautiful Soup 3 was the official release line of Beautiful Soup from May 2006 to March 2012. The current release is Beautiful Soup 4.9.1 (May 17, 2020).

You can install Beautiful Soup 4 with `pip install beautifulsoup4`.


References

- [1] "Beautiful Soup website". Retrieved 18 April 2012. "Beautiful Soup is licensed under the same terms as Python itself."
- [2] Hajba, Gábor László (2018). "Using Beautiful Soup". Website Scraping with Python: Using BeautifulSoup and Scrapy. Apress. pp. 41–96. doi:10.1007/978-1-4842-3925-4_3. ISBN 978-1-4842-3925-4.
- [3] "Code : Leonard Richardson". Launchpad. Retrieved 2020-09-19.
- [4] Tidelift. "beautifulsoup4 | pypi via the Tidelift Subscription". tidelift.com. Retrieved 2020-09-19.

APIs are not always available. Sometimes you have to scrape data from a webpage yourself. Luckily, the modules Pandas and Beautiful Soup can help!


Web scraping

Pandas has a neat concept known as a DataFrame. A DataFrame can hold data and be easily manipulated. We can combine Pandas with Beautiful Soup to quickly get data from a webpage.


If you find a table on the web like this:


We can convert it to JSON with:
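A minimal sketch, assuming the table has a header row of `<th>` cells followed by rows of `<td>` cells. The inline HTML is made-up data standing in for a downloaded page (in practice it might come from something like `requests.get(url).text`), and the column names are hypothetical.

```python
import json

from bs4 import BeautifulSoup

# Made-up page content standing in for a real web page.
html = """
<table>
  <tr><th>Name</th><th>Age</th></tr>
  <tr><td>Alice</td><td>30</td></tr>
  <tr><td>Bob</td><td>25</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")
rows = soup.find("table").find_all("tr")

# Header cells become the JSON keys.
headers = [th.get_text(strip=True) for th in rows[0].find_all("th")]

# Each remaining row becomes a dict mapping header -> cell text.
records = [
    dict(zip(headers, (td.get_text(strip=True) for td in row.find_all("td"))))
    for row in rows[1:]
]

print(json.dumps(records, indent=2))
```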

Viewed in a browser, the resulting JSON output looks like this:

Converting to lists

Rows can be converted to Python lists.
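A minimal sketch of that conversion, using made-up table data in place of a real page: each `<tr>` becomes one Python list, with header (`<th>`) and data (`<td>`) cells handled alike.

```python
from bs4 import BeautifulSoup

# Made-up page content standing in for a real web page.
html = """
<table>
  <tr><th>Name</th><th>Age</th></tr>
  <tr><td>Alice</td><td>30</td></tr>
  <tr><td>Bob</td><td>25</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")

# One list per <tr>; find_all accepts a list of tag names.
rows = [
    [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
    for tr in soup.find_all("tr")
]
print(rows)  # [['Name', 'Age'], ['Alice', '30'], ['Bob', '25']]
```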

We can convert it to a pandas DataFrame in just a few lines:
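A minimal sketch, assuming the first table row holds the column headers; the HTML is made-up data standing in for a real page. Cell text always comes back as strings, so numeric columns are converted explicitly. Pandas also provides `pandas.read_html`, which needs lxml or html5lib installed.

```python
import pandas as pd
from bs4 import BeautifulSoup

# Made-up page content standing in for a real web page.
html = """
<table>
  <tr><th>Name</th><th>Age</th></tr>
  <tr><td>Alice</td><td>30</td></tr>
  <tr><td>Bob</td><td>25</td></tr>
</table>
"""

soup = BeautifulSoup(html, "html.parser")
rows = [
    [cell.get_text(strip=True) for cell in tr.find_all(["th", "td"])]
    for tr in soup.find_all("tr")
]

# First row is the header; the remaining rows are data.
df = pd.DataFrame(rows[1:], columns=rows[0])
df["Age"] = pd.to_numeric(df["Age"])  # text "30" -> integer 30
print(df)
```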

Pretty print pandas dataframe

You can convert it to an ASCII table with the module tabulate.

This code converts the table on the web to an ASCII table:

This will show in the terminal as: